The territory of Athens was being ravaged before the very eyes of the Athenians, a sight which the young men had never seen before and the old only in the Median wars; and it was naturally thought a grievous insult, and the determination was universal, especially among the young men, to sally forth and stop it. Knots were formed in the streets and engaged in hot discussion; for if the proposed sally was warmly recommended, it was also in some cases opposed. Oracles of the most various import were recited by the collectors, and found eager listeners in one or other of the disputants. Foremost in pressing for the sally were the Acharnians, as constituting no small part of the army of the state, and as it was their land that was being ravaged. In short, the whole city was in a most excited state; Pericles was the object of general indignation; his previous counsels were totally forgotten; he was abused for not leading out the army which he commanded, and was made responsible for the whole of the public suffering. He, meanwhile, seeing anger and infatuation just now in the ascendant, and confident of his wisdom in refusing a sally, would not call either assembly or meeting of the people, fearing the fatal results of a debate inspired by passion and not by prudence.

Let's imagine the range of policies that the Athenians could have adopted. Trivially, they range from "arm everyone and sally immediately" to "surrender now"; Thucydides implies that the relevant policy range runs from the Acharnians' ideal point ("send as many men as necessary to secure the immediate neighborhood") to Pericles' ("wait until the situation has resolved itself"). Under the Athenian constitution, presumably, Pericles has the right to call the assembly to adopt a new policy, but he knows that in the heat of the moment it would adopt a policy much closer to the Acharnians' ideal point than to his.
Pericles suspects that over time Athens' allies will reinforce him, and at the same time that the Spartans will tire of offering battle without a response. The greater strategic flexibility of the Athenian navy also (he believes) offers him the ability to choose when and where to strike at the Peloponnesians and their allies, whereas giving battle to the Spartans outside of Athens risks everything. The ability to control the policy agenda is crucial, but it must be nerve-wracking to exercise. Thucydides captures the dilemma here well. Over time, Pericles' policy (in this instance) will be proven correct, but implementing it requires both bearing immediate costs and relying on the formal institutions of Athens. Had the policy failed, it would have failed catastrophically, with Pericles removed by irregular means and Athens itself in jeopardy.
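Pericles' position can be put in the terms of the post's title: he is a gatekeeper in a one-dimensional spatial model. The Python sketch below is a stylized illustration, not anything Thucydides or the structure-induced-equilibrium literature specifies. It assumes a hypothetical 0-to-1 policy scale (0 for waiting, 1 for a full sally), single-peaked preferences, an odd number of voters so that the median-voter theorem picks the assembly's outcome, and made-up ideal points. The function names are illustrative only. Pericles opens the agenda only when the assembly's median would sit closer to his ideal than the status quo does.

```python
from statistics import median


def assembly_outcome(ideal_points):
    """Outcome of open-rule majority voting on a one-dimensional policy.

    Assumes single-peaked preferences and an odd number of voters, so the
    median-voter theorem applies and the median ideal point wins.
    """
    return median(ideal_points)


def gatekeeper_calls_assembly(gatekeeper_ideal, status_quo, ideal_points):
    """A gatekeeper who dislikes distance from his ideal point opens the
    agenda only if the assembly's outcome would be strictly closer to that
    ideal than the status quo is."""
    outcome = assembly_outcome(ideal_points)
    return abs(outcome - gatekeeper_ideal) < abs(status_quo - gatekeeper_ideal)


def equilibrium_policy(gatekeeper_ideal, status_quo, ideal_points):
    """Policy that prevails once the gatekeeper has made his choice."""
    if gatekeeper_calls_assembly(gatekeeper_ideal, status_quo, ideal_points):
        return assembly_outcome(ideal_points)
    return status_quo


# Hypothetical scale: 0.0 = "wait" (Pericles), 1.0 = full sally (Acharnians).
PERICLES = 0.0
STATUS_QUO = 0.1  # hypothetical: a mostly defensive posture

angry_assembly = [0.2, 0.6, 0.7, 0.8, 0.9]     # median 0.7
calmer_assembly = [0.0, 0.02, 0.05, 0.4, 0.9]  # median 0.05

print(equilibrium_policy(PERICLES, STATUS_QUO, angry_assembly))   # status quo survives
print(equilibrium_policy(PERICLES, STATUS_QUO, calmer_assembly))  # assembly's median wins
```

With the angry assembly, the median is far from Pericles' ideal, so he keeps the agenda closed and the status quo survives; once passions cool and the median drifts below the status quo, calling the assembly becomes safe. In this toy version the equilibrium comes from who controls the agenda, not from the assembly's preferences alone.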

26 December 2012

Structure Induced Equilibria in Everything, Peloponnesian War Edition

From The History of the Peloponnesian War:

26 September 2012

I'm Happy To Bash Woodrow Wilson

America's worst postbellum president.

"In a sense, political science and political events have passed the adherents of 'responsible parties' by. It may seem foolish to waste time now on their mistake of expecting a clear binary choice. Still, we can understand their mistake a little better today. It is not simply an empirical matter--as Dahl, for example, argued--that the American (or any other) system does not work in the neat way the Wilsonians wanted. More important is the fact that what they wanted was itself morally wrong from a liberal democratic view, because to get binary choice one must enforce some method of reducing options. This is precisely the coercion involved in populist liberty. The populist expectation of a coherent program is not attainable by weaving together individual judgments, either for a small group or for a large nation. To attribute human coherence to any group is an anthropomorphic delusion. Worse, however, the populists expected to calcify politics on their own issues, progressivism or the New Deal. That is fundamentally unfair. Winning politicians are, of course, happy to stick with the issues on which they are winning. Losing politicians are not so entranced with that future. The losers of the last election become the winners of the next by changing issues, revealing new dimensions of choice, and uncovering covert values in the electorate."

Will Riker was not only a damn fine first officer, he was a damn fine political scientist. And passages such as this one should refute both the quantoid delusion that our work is value-free and the normativist's delusion that he may remain uninformed about the work his formalist colleagues engage in.

30 July 2012

My lectures gone by, I miss them so

I'm changing this year's IPE lectures a bit to downplay the details of negotiation and to bring interest-group explanations for protectionism to the fore. But that meant that I had to lose half of one lecture, which was a lot of fun--not least because the last time I taught it, I delivered the lecture just as the budget shutdown was about to take place. And so no more prize fights...

I should note that this was my favorite slide of the semester largely because it broke every rule I have for my slides. (Well, all but one: I only use hi-res jpgs in designing my slides.) Garish colors? Check. Weird fonts? Check. More than two fonts in one slide? Check. Absolutely zero information conveyed? Check. And although you can't tell from the screen grab, the various elements of the slide enter in via every terrible transition feature that Apple has to offer--explosions, dropping with a thud, and maybe even the lens flare.

Given that I created something like 500 slides for the course, it was a lot of fun to have one intentionally terrible moment ...

29 July 2012

Q. Are we not researchers? A. We are professors

I've been thinking about 80s music a lot lately.

Why should the public pay taxes to support our research?

This used to be an academic question, but officeholders like Tom Coburn and Jeffrey Flake have put the question squarely before political scientists. Other disciplines have faced this moment before, as physics did in the late 1980s and early 1990s, and still more will face it in the future. My reasoning is plenty motivated, so I think that the case for funding basic academic research is pretty good. Like a lot of social scientists, I'm frustrated by the often self-inflicted lack of solidarity among academics ("Which social science should die?") and by the professoriate's inability to sustain a campaign to make our arguments. Even the political science blogosphere's concerted arguments in favor of NSF funding probably did less to move the needle than one foolish New York Times op-ed.

The outlook for funding for the social sciences, and for academia more generally, is a lot dimmer for my generation than it was for the two or three generations of academics before us. At worst, they could look forward to long periods of stagnation (unless they were Sovietologists); at best, a field could go from nothing--literal non-existence--to a seat at the grown-up table in the time it takes some disciplines' doctoral students to finish a dissertation. As the funding pool dries up, there's bound to be a lot more friction and fighting among academics than there was when the only question was how to divide up a growing pie.

The situation won't get better on its own. I'm unconvinced by the idea that state governments' spending will naturally pick back up as the economy does, since that relies both on the economy actually picking up--green shoots!--and on politicians refraining from directing the savings from their structural adjustments toward other priorities. And even if it does, higher education is under increasing pressure to justify its business model in every dimension:

- Why should college be a physical location, and not an MMORPG-cum-TEDtalk?

- Why should professors be hired and promoted based on their research skills--most without ever having had any practical or theoretical training in pedagogy--when the public's understanding of funding universities rests on their teaching productivity?

- Why should there be an expectation that tuition should rise faster than inflation?

- Why should an English degree take as long to complete and cost the student as much as a physics or an engineering degree?

At root, we largely rely on one of two arguments to answer all of these questions. The first is that the knowledge we produce is a public good, and that institutions are organized to support our knowledge production for the maximal good of society (or, at least, that reform would cost more than it would gain--that we are at a stable local maximum). The second is that our research leads to better learning outcomes for our students. Winning the future, then, requires academics to have maximum time for research, even if there are short-term tradeoffs for the students.

I think the first answer is better, since it does at least appeal to the innate beauty of knowledge, but it tends to obscure a third reason, one we voice only silently: that learning, like art, is a luxury good. We can't say that aloud, since it carries with it the implication that opposition to learning is opposition to civilization itself, and that the superior culture is the more refined culture. That line of argument has never played well with the citizenry, and these days it would not play well with the mythical one percent.

The second answer--better research, better students, better jobs--is, to put it bluntly, not very persuasive. It is, in fact, close to being faith-based. We demand better evidence and stronger argumentation from our students when they turn in term papers than we demand of ourselves when we produce public justifications of our budgets. Why should we ask students who want to be lawyers to sit through four years of an undergraduate degree and then three years of law school when the British and others manage to produce serviceable advocates after three years of law-focused undergraduate study? And many of the other justifications for our teaching--our students learn critical thinking! statistics! data visualization! discipline!--only raise the question of why students should learn those skills by majoring in our subject instead of majoring in something more practical in which those skills are foregrounded.

There are answers, of course. One part is that it's not the ivory-tower academics who are out of touch with the real world. Rather, it's frequently our critics, the ones who deride us for not taking "real-world" problems seriously enough, who have no idea what the real world is actually like. This sounds counterintuitive, but sober reflection or residence in Washington (or Jefferson City, or Albany, or wherever legislators gather) will quickly remind you that the politicians and activists who make these claims frequently have no idea what academics or businesspeople or bureaucrats actually do. The "soft skills" matter, and those are hard to impart at a distance or by paying professors less than a living wage. As Tim Burke notes, the charge of know-nothingism applies with especial force to fact-free discussions about information technology and teaching.

A second part would be to stress forcefully how social science matters by discussing our research. This is easy in the abstract but harder concretely. Yes, individual scholars and individual projects make tangible contributions, but how many of us are willing to say that there are scholarly consensuses on issues in social science comparable to those in similarly observational sciences like geology? Where we are most consensual, we are least interesting; where we are most interesting, we are least consensual--and often (as with the democracy-promotion agenda of the Bush administration, or the debate over whether there is a resource curse) most dangerous. So we're left with the unsatisfying choice between "teaching the debate" and picking sides among competing findings where there is real and lasting disagreement. (One way of squaring the circle is to find and promote popularizers of our research--popularizers in the real Carl Sagan or even Stephen Jay Gould tradition--and let them handle the rhetorical aspects.)

But the third part is to recognize that we should probably invest systematically in better teaching, and require it, along with better measurements of teaching effectiveness. We measure research productivity decently well--by no means as well as we ought to, given the importance of the measurement and the time we've spent on it--but we rely on ... student surveys to monitor teaching effectiveness. We lack a well-defined progression of introductory, intermediate, and advanced courses comparable to what other disciplines have. (What should students learn in Introduction to Comparative Government? We don't have an answer to this question that we could explain to a congressman in the way that economists could say what Intro Micro is about.)

Achieving comparability, however, would require some conscious moves toward standardization. Such measures would reduce the autonomy of the individual instructor. And it would also prompt us to begin to assess what the discipline is and what it should become. In one sense, that means picking winners and losers, at least at the level of undergraduate instruction. And that likely would require the formalization of much political-science instruction (graduating students who don't know the median-voter theorem or the differences in free-trade voting preferences of workers and capitalists should be acutely embarrassing). It might even mean dropping one subfield--or, better, prompting that subfield to more clearly engage with the questions and methods the rest of the field has adopted (something that would benefit both sides of the debate). The relationship between international studies and political science would be redefined, perhaps drastically.

At the end of such a process, though, the relationship between our instruction and our research would be much clearer. The risk of intellectual monoculture would be blunted by the fact that different institutions would naturally choose to focus on different parts of the field. And our students would likely have a clearer idea both of what to expect in graduate school (an IR Ph.D. is not Foreign Affairs with more footnotes) and also of what the key insights of political science really are.

22 July 2012

The Rise of the Brofessor: A Totally True Trend That Is Not Linkbait At All

AWESOME CITATION BROSEPH

That being said, I would also like to get a lot of traffic to this blog, and so I want to direct the attention of pro-feminist blogs like Jezebel and Gawker and kneejerk reactionary blogs like NRO's The Corner to this Totally True Trend Story: The rise of the brofessor.

Yes, brofessors are all around. Many of them go kegging on the weekends, where they chant their slogan "WORK HARD CITE HARD." Others can be seen participating in extreme sessions of the faculty Senate, where they arrive in togas and harass the guys from the Physics department by pretending to knife them in the back. Still more can be seen getting rejected by the women of the women's studies department, all of whom are lesbians, according to many brofessors.

After a brofessor has written a book and published three journal articles, he can apply for "T&A," "Tenure and Ascension." Tenure brings one part of the brofessor's life to a close, as he leaves the assistant prof house for an off-campus apartment, but as all brofessors know, chicks dig a man with the big T. And beyond tenure, some faculty soon seek to be promoted to Full Brofessor, at which point the party never stops. Some have even suggested that the level of Distinguished Brofessor allows them to unlock new achievements, such as the combination of "sextra credit" and "team teaching."

Anyway. Totally true. Contact me or my handy list of scholar-brothers, all of whom have the talking points needed to fill out your profile in (in order of our preference) the New York Times Sunday Style section, GQ, Esquire, the Saturday Wall Street Journal Personal Journal section, the Los Angeles Times Sunday magazine, Parade, or the New York Times Thursday Style section.

Bomb throwing

Projections about foreign policy in the next five years or whatever are pretty boring. Let's start talking about wildly irresponsible foreign policy projections--like over the next century--because then we can begin to get a feel for whether anything is missing from our models.

Consider how poorly realism has fared over the past century. To observers in 1912, the idea of a global conflict might have been plausible. But that the United States would be the world's dominant power, that China would be its principal competitor, and that the rest of the league tables for great-powerdom would be filled out by Russia, Japan, India, and so on would have been sheer lunacy, as would the notion that the greatest threat that Europe faced to its domestic tranquility was the possibility that its currency union was insufficiently deep.

Realism talks a good game, but it is shockingly bad at understanding the nonlinearities of economic growth. Liberalism is hardly better. Our major theories of international relations are not just theories of the middle range, they are largely theories of next week.

06 July 2012

This is how Tibet ends: Not with a bang but with a whimper

In the late 1990s, I strenuously denied even the idea of cultural genocide. I thought it was a silly misapplication of a loaded word that obscured the possibility of free choice.

Events in China have caused me to reverse that opinion.

02 July 2012

The World's Greatest Third-World Country

Don't listen to anyone who says USA #2!

And it’s not just rail, sewers and the water supply are another example. Consider:

The average D.C. water pipe is 77 years old, but a great many were laid in the 19th century. Sewers are even older. Most should have been replaced decades ago.

Does that sound like the infrastructure of an advanced nation?

The answer, of course, is no. And the continuing power outages in the District and nearby cities only point to the astounding levels of failure that Americans implicitly tolerate. For more than a decade, it's been clear to just about all Americans who travel that we "enjoy" perhaps the worst infrastructure in the advanced world. Our cell phone service sucks. Our Internet sucks. Our trains suck big time. Our domestic airlines are so bad even non-traveling Americans notice. Our schools are frankly godawful. And from time to time giant sinkholes open up or transformers explode in our major metropolitan areas and we think, gee, maybe we ought to do something about that.

But we don't. It's sometimes hard for me to understand why the continuing ensuckening of much of American life has been met with resignation or calls for the state to withdraw from providing services instead of with calls for getting things done.

25 June 2012

That NYT op-ed

Ironically, political science as a discipline, and IR in particular, would be under much less attack if we went back to the bad old days of counting megatonnage.

And so, not for the first time, I recognize that everyone would be better off if they listened to more Randy Newman.

24 June 2012

IR, SF, and Africa

We need to update our images of Africa.

Like a lot of Americans, I don't know a lot about Africa--just enough to know that "Africa" as a label obscures as much as it illuminates, much like "Europe." Nor is the almost reflexive use of the term "sub-Saharan Africa" any better. That phrase conveys as much about the societies, economies, and polities of the continent as the phrase "sub-Canadian America" would tell us about Maine, Arizona, and the Dutch-settled part of Iowa.

So I'm not the right person to talk about how we should update our images of Africa. But I do know enough--mostly from a handful of case studies and partly from running a lot of data about the continent's countries--to know that the continent is rather diverse. And a very interesting BBC radio show about science fiction in Africa offers a particularly incisive way (for me, at least) of reflecting on how the writing of even non-authoritative texts (sf novels don't have the cultural cachet of textbooks or scholarly monographs!) constitutes a process of dominance.

22 June 2012

Notes from the King

Some folks are rock stars.

[T]he dissertation is not about writing 250 pages; it's about reorienting your life, making the transition from a student taking classes--doing what you're told--to an independent, active professional, regularly making contributions to the literature. To do this, you need to arrange your whole life, or at least the large professional portion of it, around this goal. This transition can be brutal, but it is crucial for far more than your dissertation. You must change not into a dissertation writer, but into a professional academic.

Do not go to dissertation defenses (except your own!!); they are a waste of time. Go to all the job talks you can.

Your goal is to answer the key question: whose mind are you going to change about what? ... Don't choose a "dissertation topic;" ... [y]our goal is to produce some clear results or arguments.

It might take more time than you think; might require recasting your argument, recollecting your evidence, or reanalyzing your data. Don't get discouraged; they call it research, not search, for a reason! But get it done. In my experience, almost all dissertations are written in 4 months, although it takes some years to start, and sometimes requires some motivation, like a job offer. So get started.

This process may sometimes seem like drudgery, and it is true that aside from all this you are also allowed to have a life! But do not forget that you are tremendously privileged to participate in science and academia and discovery and learning--by far the most exciting thing to 99 percent of the faculty here. The thrill of discovery, the adrenalin-producing ah-ha moments, etc., are more exciting than all the skiing and mountain biking you could possibly do in a lifetime. Don't miss how intoxicating and thrilling it all really is.

06 May 2012

Reichsbankprasident Hjalmar Horace Greeley Schacht

Hjalmar Schacht also starred in several Troma films.

Anyway, what I can't get over is that his full name was Hjalmar Horace Greeley Schacht.

What the hell?

The source of all human knowledge says only the obvious, that Schacht's name was meant to honor the American journalist and politician Horace Greeley. But why on earth would good Germans/Danes want to honor the American journalist and politician Horace Greeley? There are, as far as I know, very few American children named for Josef Jaffe.

Apparently, his father had spent time in the United States and admired Greeley's abolitionism. Which hardly dampens the irony.

19 April 2012

Nobody cares about foreign policy

Above is a screenshot of the navigation bar from the Obama 2012 Web site.

What's really remarkable about this is that the only mention of international topics comes under the rubric "National Security."

I'm hardly a subscriber to the securitization literature, but there really does seem to be something to the fact that the Obama campaign--which is presumably thinking about how to attract liberals and independents--doesn't think about "Foreign Policy" as a valuable tool to that end. Instead, it thinks about "National Security."

There are two points to be made here for students of international relations.

09 April 2012

Drinking To Prosperity and Freedom [Updated]

The sound, taste, and look of freedom and prosperity. A Bavarian barmaid serves beer during Oktoberfest. Source.

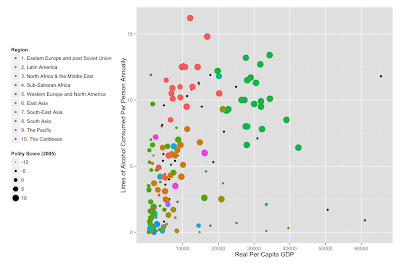

On the other hand, there's data! Thanks to the WHO, it's actually possible to do the sort of social science that horrifies PTJ but which involves numbers and statistics. Ergo, it's science.

So, I start with a simple question. Why do countries vary in their consumption of alcohol? We might think that there are two competing theories. The first is that alcohol consumption is largely culturally determined; the second is that alcohol consumption is a normal good, subject to the sort of demand and supply curves that affect all other goods.
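A minimal sketch of how the "normal good" theory could be given a number: regress log alcohol consumption on log per-capita GDP, and read the slope as an income elasticity. A positive slope is what the normal-good story predicts; it says nothing about culture unless regional or other controls are added. This is an illustration in Python under stated assumptions, not the Stata/R analysis behind the plots: the helper names are hypothetical, the OLS is hand-rolled for a single regressor, and the four "countries" are made up so that the right answer is known in advance.

```python
import math


def ols_slope_intercept(xs, ys):
    """Ordinary least squares with one regressor: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def income_elasticity(gdp_per_capita, litres_per_capita):
    """Slope of log(consumption) on log(GDP per capita).

    In a log-log regression the slope is the income elasticity. This is a
    bivariate fit: it ignores region, regime type, and any cultural controls.
    """
    log_gdp = [math.log(g) for g in gdp_per_capita]
    log_litres = [math.log(v) for v in litres_per_capita]
    slope, _intercept = ols_slope_intercept(log_gdp, log_litres)
    return slope


# Hypothetical countries (not real data): consumption is constructed to rise
# with the square root of income, so the estimated elasticity should be 0.5.
gdp = [1_000, 4_000, 16_000, 64_000]
litres = [0.1 * g ** 0.5 for g in gdp]
print(round(income_elasticity(gdp, litres), 3))
```

Working in logs is the design choice that matters here: it turns "consumption rises proportionally with income" into a straight line, so a single slope summarizes the demand-side story, and cultural explanations would show up as systematic residuals by region.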

(There will be no broad conclusions here, as I largely just wanted to have some experience merging datasets and playing around with some packages in Stata and R.)

We start with exploratory graphs. [UPDATE: I added a second plot, which I think is a little nicer.]

Alcohol consumption plotted against per-capita GDP. Polity score and region from the Quality of Government dataset.

08 April 2012

The price elasticity of labor-saving devices

Andrew Gelman passes along a great chart (via here and originally here):

In his post, Gelman praises the cleanliness of the graph, and I think that's fair--it's pretty. However, it's not obviously a great data visualization, since neither the color nor the type of the lines conveys any information beyond making the graph readable, which seems a waste (couldn't the colors have been chosen based on time-to-80% adoption or something?).

Like others, however, I find this a useful spur to thinking. In my case, looking at the S-shaped curves of adoption for most (but not all!) technologies, I thought of diffusion theorizing. In this case, what seems to be driving differential adoption rates is first the fact that relative prices for all of these innovations have fallen really far as per-capita GDP has risen and prices for manufactured goods have fallen. (Consider the "computer" in this context, which has surely changed the most of all of the innovations over time.)

Second, what is most obvious (to me, anyway) is the profound labor-price elasticity here.
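The S-shaped adoption curves in the chart are often modeled as logistic curves, and that model makes "time-to-80% adoption" easy to compute. The Python sketch below assumes a plain logistic curve, which is one common diffusion model and not necessarily what the chart's authors fit; the function names and the steepness values are hypothetical. On this model, the years needed to go from one adoption share to another depend only on the steepness parameter k, so anything that speeds adoption, such as falling relative prices, would show up as a larger k.

```python
import math


def adoption_share(year, k, midpoint):
    """Logistic (S-shaped) adoption curve: share of households using a technology.

    k is the steepness of the S; midpoint is the year adoption reaches 50%.
    """
    return 1.0 / (1.0 + math.exp(-k * (year - midpoint)))


def year_reaching(share, k, midpoint):
    """Invert the logistic curve: the year adoption first hits `share`."""
    return midpoint + math.log(share / (1.0 - share)) / k


def years_from_to(k, low=0.10, high=0.80):
    """Years to go from `low` to `high` adoption. The midpoint cancels out,
    so on this model the pace of diffusion depends only on k."""
    return (math.log(high / (1.0 - high)) - math.log(low / (1.0 - low))) / k


# Two hypothetical technologies with different steepness.
for name, k in [("slow diffuser", 0.1), ("fast diffuser", 0.4)]:
    print(name, round(years_from_to(k), 1), "years from 10% to 80%")
```

Coloring each line by a statistic like `years_from_to` would be one way to make the chart's colors carry information.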

07 April 2012

I don't think that word means what you think it means

Dear The Atlantic: "Matters" does not refer to anything in that box. Please adjust your editorial standards accordingly.

A Magnum is a period piece

One of the constants in my somewhat protean political affiliations has been a siding with what Europeans conceive of as an American form of justice--which is to say, something that approximates the criminal justice standards prevailing in late nineteenth-century Texas. The less-famous monologue from Dirty Harry expresses this pretty well:

[Harry is getting a dressing-down for his most recent arrest]

District Attorney Rothko: You're lucky I'm not indicting you for assault with intent to commit murder.

Harry Callahan: What?

District Attorney Rothko: Where the hell does it say that you've got a right to kick down doors, torture suspects, deny medical attention and legal counsel? Where have you been? Does Escobedo ring a bell? Miranda? I mean, you must have heard of the Fourth Amendment. What I'm saying is that man had rights.

Harry Callahan: Well, I'm all broken up over that man's rights! (Source)

So, yes, I like Dirty Harry even as I recognize that this pretty much makes me side with some fairly far right-wing affections. Of course, the world of Dirty Harry is right-wing pornography, in which "git tuff" policies actually work and criminality is a consequence of a diet low in moral fiber.

Now, that being said, it is a great work of right-wing pornography. And that is why I'm delighted to find Dirty Harry Filming Locations, which puts the film into real perspective.

Update: I'm following Dirty Harry with The Hunt for Red October, which is a similarly inflected film about honor, duty, and misguided liberal social systems. That's a joke. But the opening few minutes--with the "Hymn to Red October"--kind of makes me yearn for the bad old days.

28 March 2012

Leaving Gotham

This is an ad for Obama. It could easily be an ad for Domino's pizza. As a party game, count the number of fonts: I see five, which is the same number of angels who wept when they saw this jpg.

Consider the Obama 2008 campaign's masterful handling of typefaces in branding the then-Illinois senator. Gotham is a magnificent font, summoning the best of the industrial-era firmness and streamlining of Art Deco with a mature and inspiring mixture of informality and strength.

It was perfect.

Therefore, Obama abandoned it.

At left is a Web advertisement from the New York Times. It is a travesty as shameful as the solicitor general's performance in oral arguments yesterday. It is needlessly filigreed, polluted by multiple typefaces, and inconsistent in its color schemes. Even the familiar Obama "O"--once the central point of the brand's visual identity--has been reduced almost to illegibility.

The White House Web site is, if anything, even worse.

If you use six typefaces on your home page, the terrorists have won.

Gotham makes a cameo in the bottom right, but the remainder of the Web site uses a mix of Georgia, Arial (goddamn ARIAL!), and some custom font I don't recognize. (In some places, the designers were unclear whether they wanted serif or sans serif; the CSS reveals that the font stack for certain elements is Georgia, then Arial or Helvetica, and then Verdana. Why not just use Comic Sans, Barack?)

The most glaring flaw, of course, is that terrible, Fox News-style red, white, and blue banner for "West Wing Week." It's bad enough that the Obama Elegant font for "West Wing Week" at top left places a weird visual emphasis, such that it reads as WEST WING WEEK, but the boring, Microsoft Word-looking sans-serif banality of the banner is a betrayal of Jesus, NASCAR, and the Statue of Liberty.

25 March 2012

Global Divergence And Convergence

Let me talk about something I admit I know very little about to ask some questions I'm not sure IR has any good answers to.

The United Nations projects that over the coming century the global balance of population will radically shift, with Asia losing its longtime status as home to a majority of the world's population and Africa rising quickly to account for somewhere around a third of humanity, an increase from about 15 percent today. Individual countries show the trend in detail fairly well:

As is well known, China's fertility rates are being artificially depressed, while India's remain high. Consequently, India will likely overtake China, whose total population is expected to peak sometime in the next twenty or thirty years, and become the most populous country on earth by mid-century. What is unknown is just how populous India will be in 2100--the UN gives a range between about 900 million and 2.6 billion, which is to say that the UN's demographers have no idea.

What is less well known is how quickly many African countries are growing. Nigeria and Tanzania are forecast to be among the five most populous countries by the end of the century, and the UN's estimates for Nigeria (pictured) are, again, almost no help: the UN's mean forecast is that Nigeria's population will be about 650 million in 2100, but the range is somewhere between 275 million and 1.2 billion -- a margin of error roughly the size of Africa's total population today. (Even if we restrict ourselves to the 95 percent confidence intervals, we are still left with a range between 425 million and 1.1 billion.)

For countries in the developed world, which for these purposes temporarily includes Russia, we have a better understanding of how fertility is likely to behave, and so we can forecast demographics a little more precisely. The United States will continue to grow over the coming century, with a mean population forecast of 450 million and a range between 375 and 525 million, while countries such as the UK, France, and Italy will stay roughly in their current weight classes. Russia, by contrast, will almost certainly lose population.

How does international relations respond to challenges such as divergent population growth? Over the past century, such complications have been ignorable, because poor countries were normally also relatively small (Europe long accounted for about a quarter to a third of the world's population) and fairly weak. But for the next several decades many such countries will be growing both their populations and their per-capita GDPs at rates that vastly outpace the Industrial Revolution.

I've put together another figure that shows changes in GDP per capita for selected countries and regions over the past millennium, to illuminate the scale and speed of the changes we're seeing in global economics.

(The line for Western Europe and its colonial offshoots, such as the USA and Canada, is almost identical.)

This figure demonstrates that the past two centuries have been a period of exceptionally strong divergence between the "West" and the "Rest." It also suggests that this period is drawing to a close--for the first time since World War II, Western Europe's lead in economic development over the rest of the world is eroding, while for the first time since the early Qing Dynasty, China's GDP per capita is approaching the global mean.

The notion of the "developed" world is one that has also proven malleable over time. Portugal, for instance, was a charter member of the OECD, but its economic development looks downright stagnant compared to South Korea. And, of course, Japan famously became the first non-Western nation to achieve Western levels of technological and economic development.

So what does a world in which Europe is a rounding error look like? What happens when the Atlantic Community is less a security community and more a gated community--a small neighborhood of wealthy and aging people whose borders are patrolled by heavily armed guards? Do states that are growing so rapidly and yet have comparatively few resources behave in the way that realism or liberalism expects? UPDATE: A better way of asking this is: Are our theories of IR as scalable as they purport to be?

I'm not sure that our theories of international relations have properly specified their operative assumptions in full.

23 March 2012

Jobs: The fundamental metric of political worth

As the election cycle heats up, my email inbox fills up with solicitations and denunciations from rival campaigns. Interestingly, it appears that candidates from both parties have converged on a solution to one of mankind's longest-standing philosophical problems: how to measure the intrinsic worth of political action.

This puzzle, which bedeviled Aristotle, Augustine, Machiavelli, Hegel, and Rawls, has a beguilingly simple answer--at least if contemporary American political advertisements are to be believed. It turns out that all policies, initiatives, actions, and elections can be judged according to one criterion:

Jobs.

A good policy is one that creates jobs. A bad policy is one that destroys jobs.

So simple! Were you worried about a policy's coarsening effect on the moral sensibilities of the country? Immaterial, as long as it creates jobs. The moral justification for launching a war of choice? Irrelevant, since the war creates jobs! The demise of public-spiritedness as our economy becomes a series of tournament-style rewards? It's only bad if it destroys jobs!

So, World War II: Very good. It created a lot of jobs. The American Revolution was probably bad, since it destroyed many jobs. The Civil War was good on a technicality: It created jobs, although in doing so it ruined many Southerners' asset portfolios, which otherwise would have been a bad thing. The Civil Rights movement? Neither good nor bad, since it had a net zero impact on jobs.

Some people have suggested that we might divide jobs into "good" and "bad" jobs, but this distinction--once prevalent--has long since disappeared. So we can no longer worry about whether good jobs are destroyed in favor of bad jobs. Because the only thing that matters is jobs.

21 March 2012

Of budgets and values

It is shockingly hard to come up with an image to accompany a post about budgeting.

Gordon Gekko's motto--"Greed, for lack of a better word, is good"--was willfully contrarian but hardly persuasive. Greed has the virtue of predictability, but it is not good unless incentives are aligned to an implausible degree.

But budgets are beautiful.

Budgets are beautiful because they are stark and truthful. They tell you everything you need to know about an organization. Does the organization assign resources politically or logically? Does the organization think hard about planning for contingencies? Does the organization recklessly commit all of its funding with no reserve?

Budgets are especially beautiful when they are based on lies.

Consider what Enron's balance sheet said about the organization. The budget was based on lies, and because it was based on lies you could tell that the organization was based on lies. An organization whose management has to lie to make its numbers is condemned to fail. (Although not necessarily quickly.)

But it's impossible to make those lies last forever. Eventually, revenues have to meet expenses. And eventually assets have to equal liabilities plus equity.

Organizations do lots of things that aren't beautiful, because they don't have to be true. Organizations sponsor charitable works, even when those organizations' missions are the antithesis of charity. Organizations pass resolutions declaring that they're in favor of women or civil rights, even when those organizations are opposed to both. And organizations say they hire and promote on merit, even when all the top-ranked people in an organization look and sound the same because they're from the same school, same town, same class.

But budgets can't be hypocritical. An organization either spends what it has on a priority or it doesn't. Budgets, therefore, are the ultimate statement of an organization's real values. If an organization values foresight and preparedness, the budget will reflect that. If it's a risky and venturesome organization, the budget will reflect that, too. And if the organization is rotten to its core, then the budget will reveal that, too.

22 February 2012

What's the count in the American empire debate?

As part of a project I'm working on this term, I ran these Google Books n-grams for a professor. (They aren't the first or even the most impressive ones I've run, but they are the ones I'm furthest behind on sharing.)

The first corpus is a broad overview of the frequency of the use of the term "American empire" in Google's corpus of American English.

"American empire", corpus: American English, 1850-2008

What emerges is a somewhat surprising pattern: There's a long decline over the nineteenth century, a blip following the acquisition of an actual American empire in 1898, and then a long plateau following the United States' inadvertent victory in World War I.

The decline that set in after the height of Vietnam War guilt was reversed almost immediately by the events of September 11, which sent discussions of "American empire" to an all-time high (that's right, even eclipsing the period when we stole--there's no other nice word for it--Hawai'i, Puerto Rico, the Philippines, and Guam!).

"American empire", corpus: American English, 1968-2008

17 February 2012

16 February 2012

In torrential rains, you need an umbrella. For torrents of data, you need statistics

Technically, "because I didn't have observational data." Working with experimental data requires you only to be able to calculate two means and look up a t-statistic in a table.

and I surely can't be the only person who always says "Of the future!" in the same way that the announcers of the 1930s Flash Gordon serials would announce the impending arrival of aliens --

but that this torrent of data means that it will take vastly longer for historians to sort through the historical record.

He is wrong. It means precisely the opposite. It means that history is on the verge of becoming a quantified academic discipline.

The sensations Silbey is feeling have already been captured by an earlier historian, Henry Adams, who wrote of his visit to the Great Exposition of Paris:

He [Adams] cared little about his experiments and less about his statesmen, who seemed to him quite as ignorant as himself and, as a rule, no more honest; but he insisted on a relation of sequence. And if he could not reach it by one method, he would try as many methods as science knew. Satisfied that the sequence of men led to nothing and that the sequence of their society could lead no further, while the mere sequence of time was artificial, and the sequence of thought was chaos, he turned at last to the sequence of force; and thus it happened that, after ten years’ pursuit, he found himself lying in the Gallery of Machines at the Great Exposition of 1900, his historical neck broken by the sudden irruption of forces totally new.

Because it is strictly impossible for the human brain to cope with data on that scale, the age of big data will force us to turn to the tools we've devised to solve exactly that problem. And those tools are statistics.

It will not be human brains that directly run through each of the petabytes of data the US Air Force collects. It will be statistical software routines. And the historical record that the modal historian of the future confronts will be one that is mediated by statistical distributions. Inshallah, yes, this means that historians will debate whether a given event was caused by a process that follows a negative binomial or a Poisson distribution.

And scholarship will be better for it.

Mostly.
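To put some flesh on the Poisson-versus-negative-binomial line: a Poisson process forces the variance of event counts to equal their mean, while a negative binomial lets the variance exceed the mean (overdispersion). The crudest first check is the ratio of the two. This is a toy sketch of my own, not anything from the Air Force's pipeline; the yearly counts are invented, and the 1.5 cutoff is an arbitrary rule of thumb rather than a formal test.

```python
from statistics import mean, pvariance


def dispersion_index(counts):
    """Return variance / mean for a sequence of event counts.

    Under a Poisson model the variance equals the mean, so the index sits
    near 1; a negative binomial allows variance > mean, pushing it above 1.
    """
    m = mean(counts)
    if m == 0:
        raise ValueError("mean count is zero; dispersion index is undefined")
    return pvariance(counts) / m


# Hypothetical yearly counts of some event type in an archive (invented data).
# Both series average 4 events per year but differ wildly in spread.
steady = [2, 5, 3, 7, 4, 1, 6, 4]
bursty = [0, 1, 0, 12, 2, 0, 15, 2]

# Arbitrary illustrative cutoff, not a formal test of overdispersion.
CUTOFF = 1.5

for label, counts in [("steady", steady), ("bursty", bursty)]:
    idx = dispersion_index(counts)
    verdict = "no sign of overdispersion" if idx < CUTOFF else "overdispersed; negative binomial?"
    print(f"{label}: variance/mean = {idx:.2f} ({verdict})")
```

Running it flags the bursty series and not the steady one, even though both average four events a year. A real analysis would fit both distributions and compare them formally; the ratio is only a first look at which story the archive is telling.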

I do have the notes for my long-promised sequel to "What do quallys know, anyway?", tentatively entitled "Quantoids don't know anything," and this will probably prompt me to finally finish it.

26 January 2012

Gregory House Is Not a Ph.D.

Vikash erred in using pictures of Hugh Laurie to illustrate his post. Nobody wants to see that.

As important as the topics raised in these posts are, they don't go far enough. Considering that one of the major points of Vikash's argument is about House's choice to be addicted, Phil, surprisingly, doesn't make the obvious point about Gary Becker's theory of rational addiction. Becker often gets slammed for this theory as an example of rationalist thinking taken too far, but consider what happens when you decide to get drunk. Your actions are, essentially, logically similar to those of Becker's agent, with the caveat that in the morning (or, at least, by mid-afternoon) you will again be sober.

So alcohol is clearly a way by which agents choose to alter their own preference functions credibly and (in the moment) irreversibly. This is, it turns out, not so difficult to model mathematically. And I would argue that the discounting logic that goes into the question of Optimal Drunkenness (or Optimal Sponge) is something that actually cannot be expressed as well in words as in algebraic notation. (Indeed, words are always an inferior vector for expressing logical relationships if efficiency is our only criterion, and they are almost always strictly inferior in terms of their precision.)
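For what the algebra might look like, here is a stripped-down, discrete-time sketch in the spirit of Becker and Murphy's rational-addiction setup. The notation and simplifications are my own assumptions for illustration, not Phil's or Vikash's, and it leaves out the budget constraint and most of what makes the real model interesting:

$$
\max_{\{c_t\}_{t=0}^{T}} \; \sum_{t=0}^{T} \delta^{t}\, u(c_t, S_t)
\qquad \text{subject to} \qquad
S_{t+1} = (1-d)\,S_t + c_t
$$

Here $c_t$ is how much you drink in period $t$, $S_t$ is the accumulated addictive stock (how drunk, or how habituated, you are), $\delta \in (0,1)$ is the discount factor, and $d \in (0,1]$ is the rate at which the stock wears off, which is the formal version of being sober again by mid-afternoon. In this sketch, addiction is captured by assuming $\partial^2 u / \partial c\,\partial S > 0$: a larger stock raises the marginal utility of drinking more. Choosing $c_t$ therefore shapes the preferences your future self will hold over $c_{t+1}$, which is the credible, self-inflicted preference change in words above, stated in one line.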

But note that this is not an ontological or epistemological dispute. It is very precisely a methodological one, about the best ways for social scientists (and everyone else, really) to think about how to explore and to know the social universe. And here Phil's broader point stands. Even though one class of theorist managed to colonize formal logic first in the social sciences, there is no requirement that all theories expressed formally be of their ilk.

20 January 2012

Definitions matter, so please provide them

Immerman, An Empire for Liberty (2010, Princeton UP):

Empire, as a noun, was value-free at the time the United States gained its independence. While its precise definition is elusive because of the problem of translation, it derived from the Latin imperium, which in English approximates the words rule and sovereignty. Hence its definition was functional or instrumental. Greeks used it to describe the relationship between the city-states that united to oppose the Persians (who also comprised an entity called an empire). But Athens exercised leadership over its fellow city-states; it did not really rule them.

I am not trying to be difficult here, but I find this paragraph hard to follow in a way that (empirical) political science rarely is. Why are we talking about imperium to describe the Athenians' relationship with the Delian League, when there are perfectly good words like hegemony to do that work instead? In what way was Athenian "leadership" different from Persian "rule"?

And why does the "Augustan" invention of bureaucracy (which, to readers of S.E. Finer's History of Government from the Earliest Times or, I don't know, Weber, would come as a surprise) that Immerman attributes to Michael Doyle's work two sentences later represent a phase change in "empire"? (Does that mean that we should call the "Augustan Threshold" the "Qin Revolution"?)

Some of my friends often claim they find historians hard to read because so many concepts are rendered informally or, worse, idiographically. I used to disagree with them. But the more I encounter academic historians seeking to make grand theoretical (or grand-theoretical) claims, the more I sympathize with their complaints.

19 January 2012

"That's important." versus "That's cool!"

The epitome of cool.

Like all overgeneralizations, that's an overly broad statement, but I want to push it a little. Without confessing too much to the Internet, I have to say that sometimes I find work published in political science journals tedious, even when I think that the substance is important. Conversely, sometimes I find work done in economics to be flat-out awesome, even when I think the substance is meaningless.

The difference is visceral. My response to work in the former category might be to dutifully file the article away if it's relevant to a project I'm working on, and then remember to put "(Author 2011)" in my lit review section. My response to work in the latter category is to email it to my friends, usually with a subject line like "Awesome!!!" and then a message like "Sweet identification strategy!!!!!" (Cf. evidence on educational sorting from the market for movie star marriages.)

So I'm pretty firmly in the awesome camp.

This research design is going to be legend ... wait for it ... ary.

Ideally, that means my projects would combine novel, exacting theory with testable implications that involve clear identification strategies and unique datasets or cases. And sometimes that happens! The flip side is that, in the worst-case scenario, I could get distracted by how awesome a dataset or a case is and try to back out a theory to justify spending time on it.

Fortunately, that really hasn't happened much since first year.

But it means that I really can't wake up in the morning determined to study something because it's important. It's the diamonds-water paradox of social science. Freakonomics (at least the early, peer-reviewed Levitt stuff) was awesome and often theoretically relevant; the reaction from the policymaking public (and often the disciplinary public too) was often "Who cares?" By contrast, another grinding, dull paper based on a novel dataset establishing some "important" and "worthy" fact is easier to justify to non-academics but leaves everyone except for your subfield stablemates bored to tears. (See all area studies journals, especially those below the first rank.)

I wish I were a guy motivated by importance. It seems like it would be more rewarding to talk about (say) nuclear nonproliferation if you thought your work could avert the deaths of millions. By contrast, I can't be the only guy (please say I'm not the only guy) to think that an accidental use of a WMD could be a really awesome treatment, since it would be at least as-if random. (I'm joking, mostly. But we all know that papers like Acemoglu and Robinson have used treatments that were fairly horrific to place in pretty good journals.)

So maybe it's a phase. But even if I grow out of it, I'm not sure that the discipline will.

13 January 2012

Google is incredibly smart

Well, there's no way that this Google search should have worked, but it did, and now I'm super-amazed at Google.

I think by now we've all experienced the "Google glitch," which is when you assume that Google knows exactly what you're talking about even though there's no way it should. For instance, the other day, I was looking at china patterns, and so I simply googled "china."

There were a lot of results I honestly didn't expect, because I somehow thought that Google would know I wanted to learn more about saucers and serving dishes. After all, it was sitting right there during the entire conversation!

But this is something else. This is Google understanding that "ajps" is "American Journal of Political Science." And that is amazing.

Also, a sure sign that Google + Siri + UAVs = Skynet.

10 January 2012

Nights in White Satin

The Cambridge Nights Web site is a great idea, but I can't help but notice:

Only white(ish) men have ideas?

Anyway, I prefer Blam Nights.

04 January 2012

"All our language has been taxed by war"

The news comes that Boeing will shut down its defense plant in Wichita, Kansas.

Allen Ginsberg would be ecstatic.

I'm an old man now, and a lonesome man in Kansas but not afraid to speak my lonesomeness in a car, because not only my lonesomeness it's Ours, all over America, O tender fellows-- & spoken lonesomeness is Prophecy in the moon 100 years ago or in the middle of Kansas now.

02 January 2012

Scholars shouldn't host their professional Web sites on institutional servers

Why do scholars host their professional Web sites on institutional servers?

To be fair, I can understand why this is the default position for most scholars. First, unless you're a rare scholar--one of the top five in your field, the kind of scholar who has a better name brand than the institution at which you're located--then having myuniversity.edu/myname is more immediately attractive than having myname.com.

Second, as my fictitious Jewish grandfather might say, hosting your own site (even on someone else's server) means you're dealing with the FTPs and the logons and the HTML--who can understand it?

Third, academics are weird about self-promotion. Even though they live in a world entirely determined by reputation (think about it: we have anonymous peer review but we make sure that our names are on our articles and books!), they think that there's something unseemly about trying to ... advertise the work that they've dedicated their lives to. Much better, of course, to hope that someone takes the time to read their work in the Journal of Tiresome Drivel (newly included in SSCI), their monograph with North Kentucky Press, and their, um, anonymous blog.

...

So anyone who registers myname.com is obviously a self-promoter of the worst kind. Probably votes Republican, too.

But anyone who has bothered to set up a Web site on a university server has already probably overcome objection #2. (If you attend my institution, Prestig* U., you've proven yourself extremely fluent with Web technology ca. 1996 if you've managed to set up your Web site on our server.) And objection #3 is, ahem, pretty transparently dumb, too, since you're thinking about setting up a Web site on the Internet.

So objection #1 is pretty much the only serious one. But it's wrong. In fact, having an institutionally affiliated Web site is probably a bigger problem in the long run. Why? Because your affiliation might change. And if it does, then you probably won't set up your Web site at your new institution as nicely as it had been at your old job. Think of it: after moving cross-country, with all that entails, are you really going to want to spend another evening recoding HTML or even sitting down with iWeb to cleanse all mentions of Former University from your site?

What that implies, in turn, is that you will put up a temporary site with some of the things that used to be on your Web site, with every intention of making it better in "the future," a mysterious time when you have the leisure to take care of such things. Or, even worse, you'll just let your Former University Web site keep going until some tight-fisted administrator kills it and you have to scramble to upload everything to a hastily written site on a new server. In either case, you won't get anything like the benefit you imagined when you first decided to host your working papers and (pirated) published articles. This applies most strongly to graduate students, of course, whose institutional affiliations are guaranteed to change and who have the most to gain as their reputations grow.

*"Prestig" is not a good thing. Prestige is to "prestig" as the Nobel Prize in Physics is to the Bank of Sweden Memorial Prize in Economics.

01 January 2012
